Application-Specific LLM Evaluations

Measure what Matters

Standard benchmarks don't predict real user success

General evaluations and test prompts don't tell you if users will actually succeed with your product. Mandoline helps you evaluate what matters: can users accomplish their goals? Our user-focused evaluation framework measures real-world task performance.

LLM behavior varies unpredictably across models and time

Different models handle the same prompts differently, and behavior can change with each update. Mandoline gives you insight into how models perform on your specific use cases, helping you catch issues early and make informed decisions about which models to use.

It's hard to know if changes really improved the product

Traditional A/B testing doesn't work well for LLM product development. Mandoline's LLM evaluation framework helps you measure the impact of prompt engineering, fine-tuning, and product changes. Know with confidence if your changes made things better or worse.

How it Works

Simple, scalable, flexible pricing

EVALUATION TYPE | COST / EVAL ($) |

|---|---|

Text | 0.03 |

Vision | 0.04 |

Built by LLM developers for LLM Developers

We've experienced firsthand the challenges of evaluating and improving AI systems in real-world contexts. We built Mandoline to address these pain points.

Our goal is to help other AI product teams build more useful LLM-powered applications, ultimately leading to improved user experiences.

Frequently asked questions

Can’t find what you’re looking for? Reach out to us at support@mandoline.ai.

What is Mandoline?

Mandoline helps developers evaluate and improve LLM applications in ways that matter to users. It bridges the gap between abstract model performance and real-world usefulness by enabling custom, application-specific evaluations of LLM outputs. Learn more in our Core Concepts guide.

How does Mandoline differ from traditional LLM evaluation methods?

Unlike traditional methods that often focus on generic metrics, Mandoline emphasizes user-centric, application-specific evaluation. It allows you to create custom metrics tailored to your use case, evaluate LLM outputs in real-world contexts, and track improvements over time. See our Quick Start example for a practical example.

How can I get started with Mandoline?

Simply sign up for an account, integrate our API, and customize your evaluation settings. Our Getting Started guide and developer-friendly documentation are here to help you every step of the way.

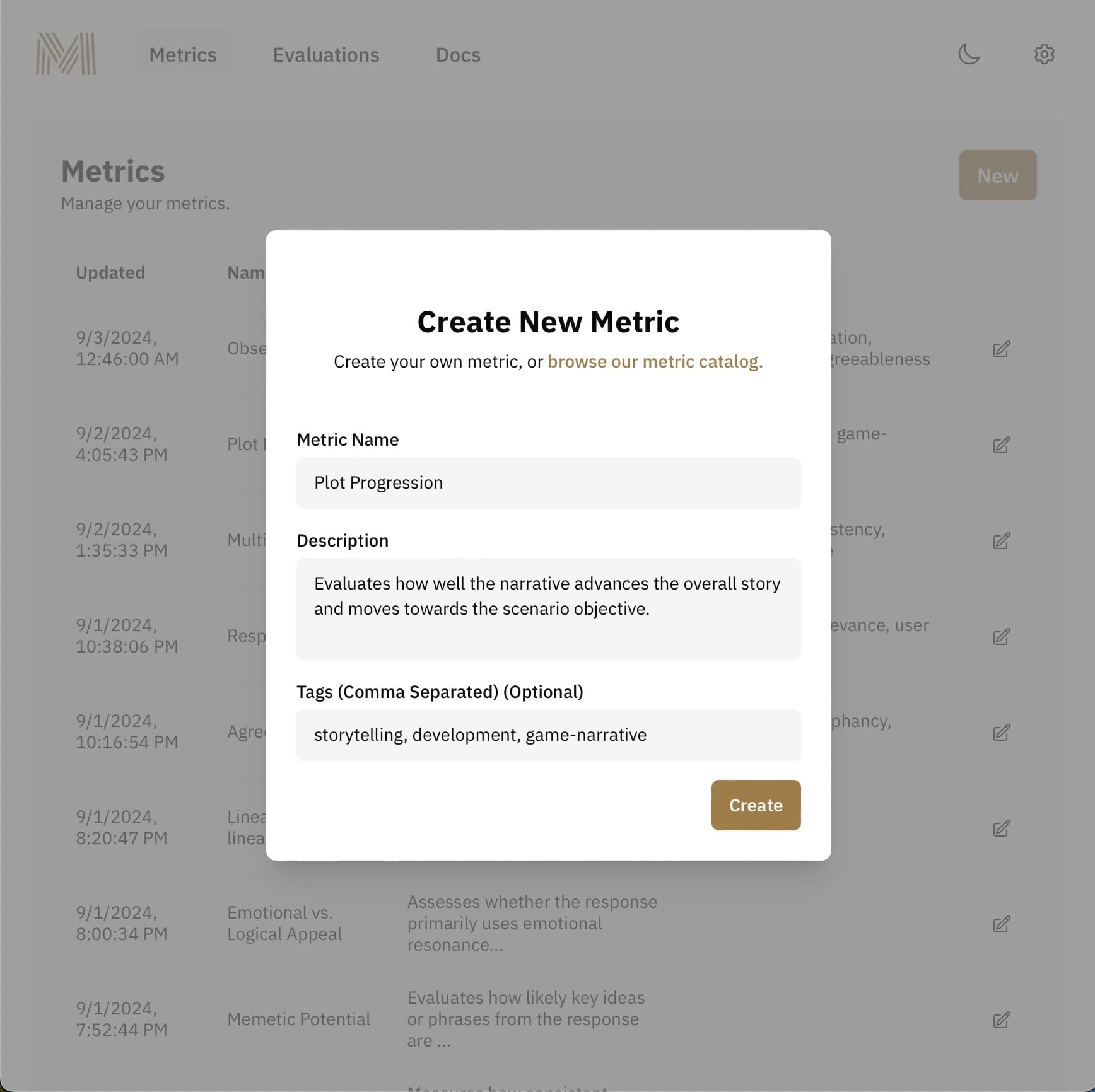

What are custom metrics in Mandoline and how do I create them?

Custom metrics in Mandoline are user-defined evaluation criteria that measure specific LLM behaviors or outputs relevant to your application. You can create a custom metric using the Mandoline API by defining a name, description, and optional tags. Check our Metrics explainer to learn more.

How does Mandoline handle large volumes of data?

Mandoline is designed to efficiently apply custom metrics across a large number of LLM outputs. It uses offset-based pagination for listing metrics and evaluations, allowing you to process large datasets effectively. This scalability helps you to perform detailed evaluations on substantial amounts of data, giving you a more robust understanding of your LLM's performance across various scenarios. Learn more about our pagination system in our API docs.

How can I compare different LLMs for my task using Mandoline?

Mandoline enables model comparison by allowing you to create custom metrics tailored to your use case. You can then use these metrics to evaluate and compare different LLMs systematically. Mandoline provides tools for analysis of model performance across various dimensions, helping you make decisions about which model performs best for your specific requirements. Our Model Selection tutorial walks you through this process step-by-step.

Can Mandoline help with prompt engineering?

Yes, Mandoline can be used to test different prompt structures and analyze their effectiveness. You can create metrics to evaluate the quality of responses generated from different prompts, allowing you to identify which prompt structures work best for your specific use case. This helps in reducing unwanted behaviors in LLM responses and improving the overall user experience by refining the way you interact with the AI model. Check out our Prompt Engineering tutorial for a practical guide.